In previous posts, I’ve shown you how to deploy an SDDC in Google Cloud VMware Engine, connect the SDDC to a VPC, and deploy a bastion host for managing your environment. In this post, we’ll pause on deploying anything new and take a closer look at our SDDC. I’ll give an overview of its networking configuration and capabilities, and show how to connect to the SDDC from an external site.

Other posts in this series:

- Deploying a GCVE SDDC with HCX

- Connecting a VPC to GCVE

- Bastion Host Access with IAP

- HCX Configuration

- Common Networking Scenarios

SDDC Networking Overview

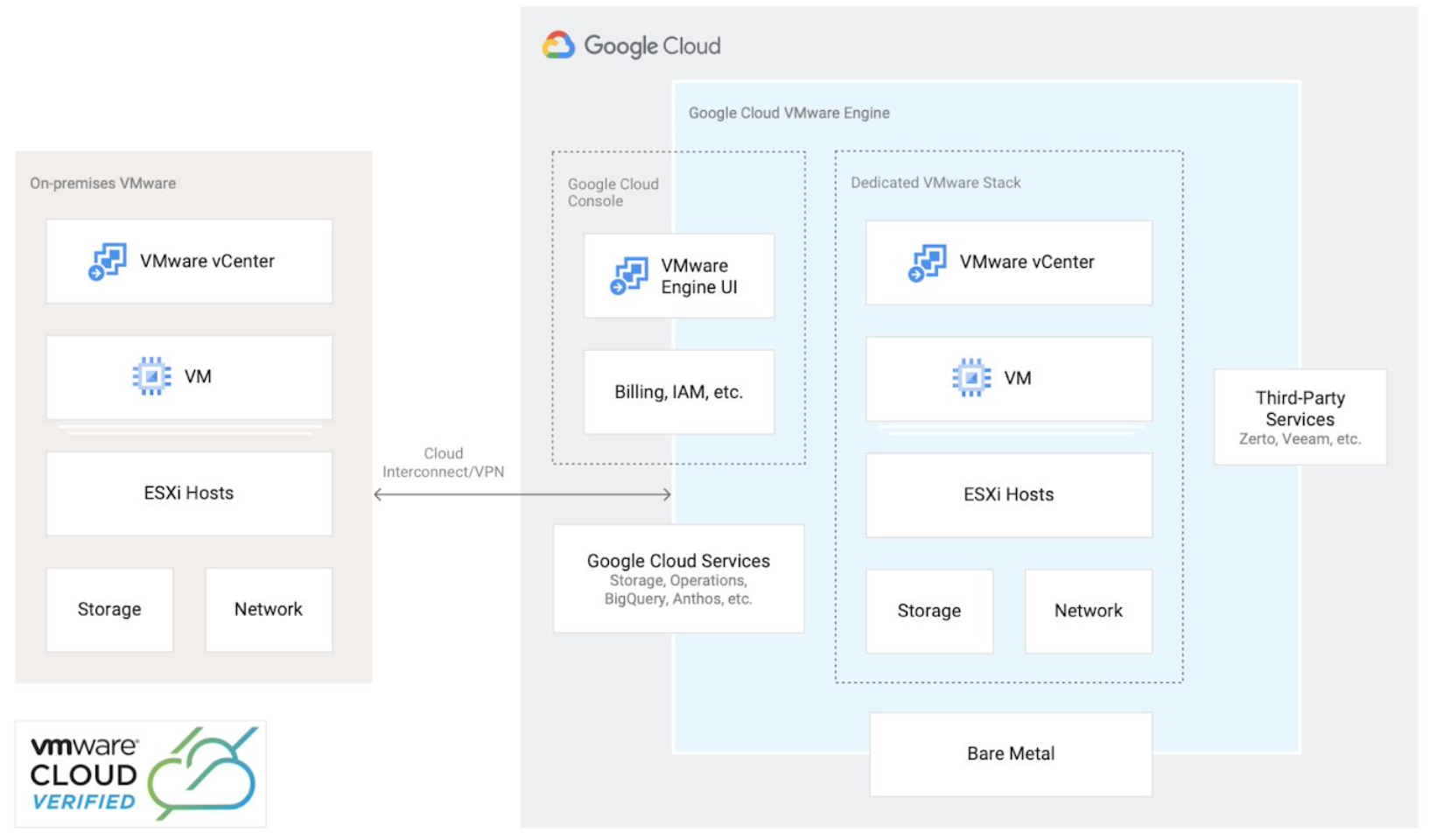

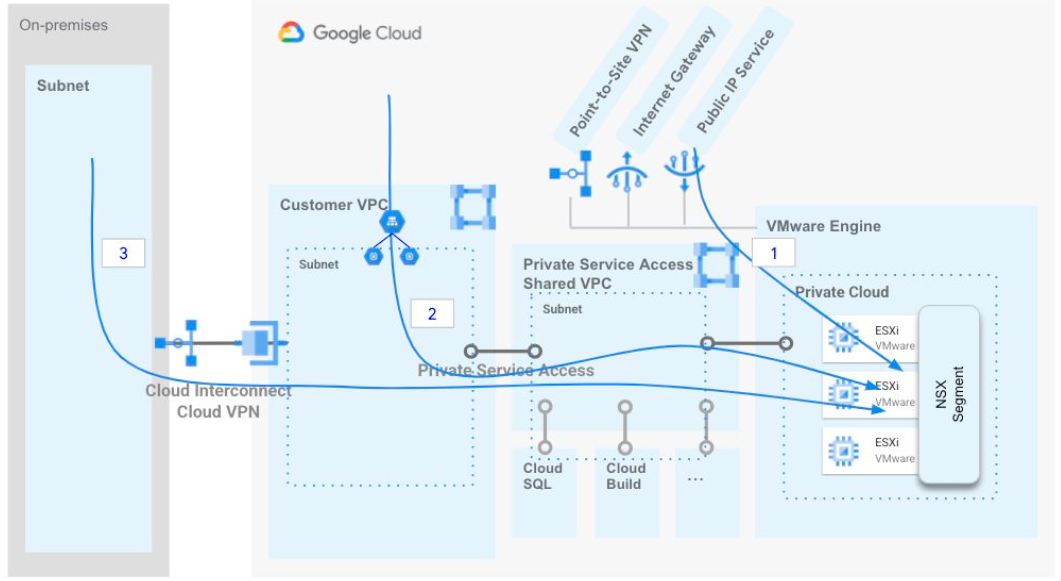

Google Cloud VMware Engine Overview by Google, licensed under CC BY 3.0

An SDDC running in GCVE consists of VMware vSphere, vCenter, vSAN, NSX-T, and optionally HCX, all running on top of Google Cloud infrastructure. Let’s take a peek at an SDDC deployment.

VDS and N-VDS Configuration

The single VDS in the SDDC has a basic configuration and is used to provide connectivity for HCX. The VLANs listed are locally significant to Google’s infrastructure and not something we need to worry about.

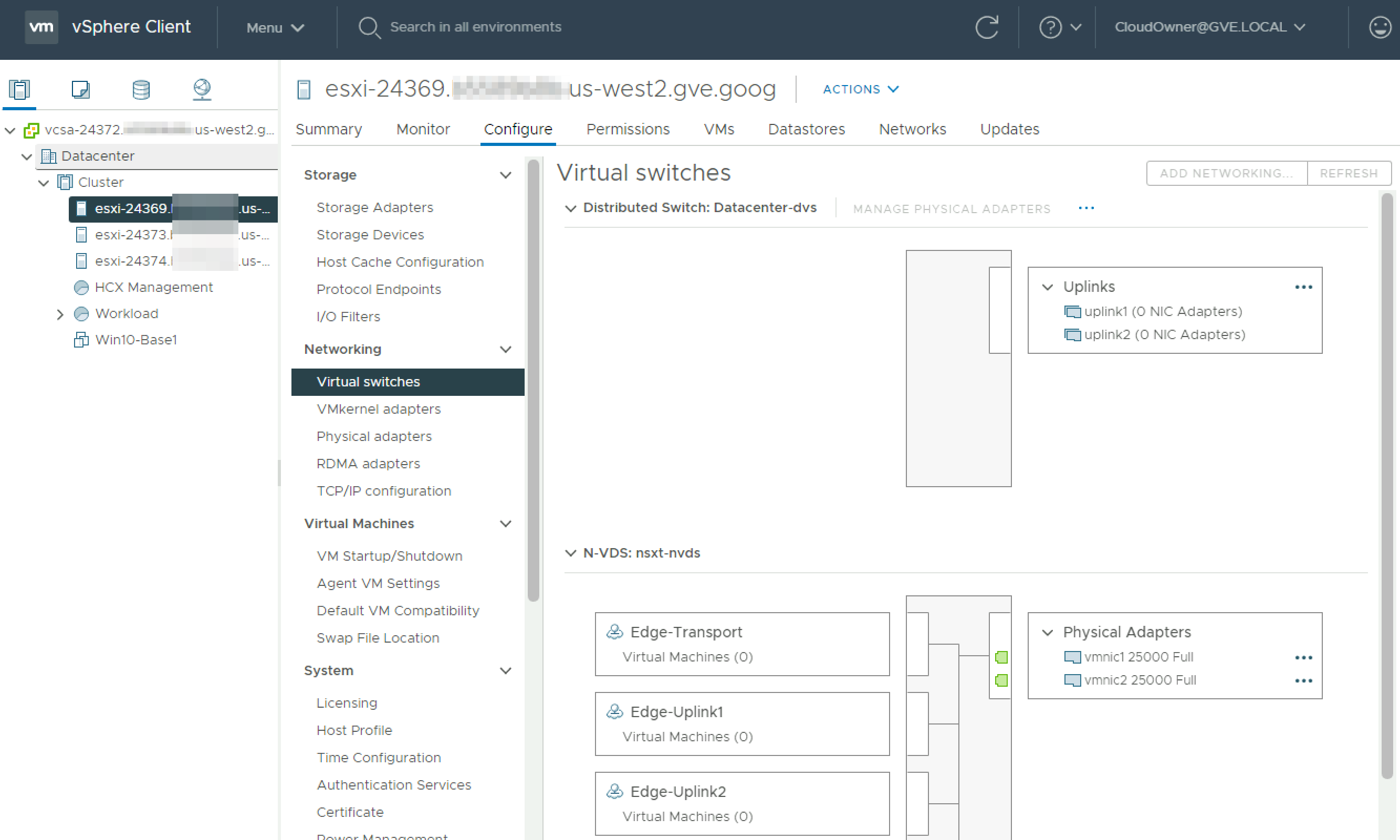

The virtual switch settings for one of the ESXi hosts provide a better picture of the networking landscape. Here we can see both the vanilla VDS and the N-VDS managed by NSX-T. Almost all of the networking configuration we perform will be in NSX-T, but I wanted to show the underlying configuration for curious individuals.

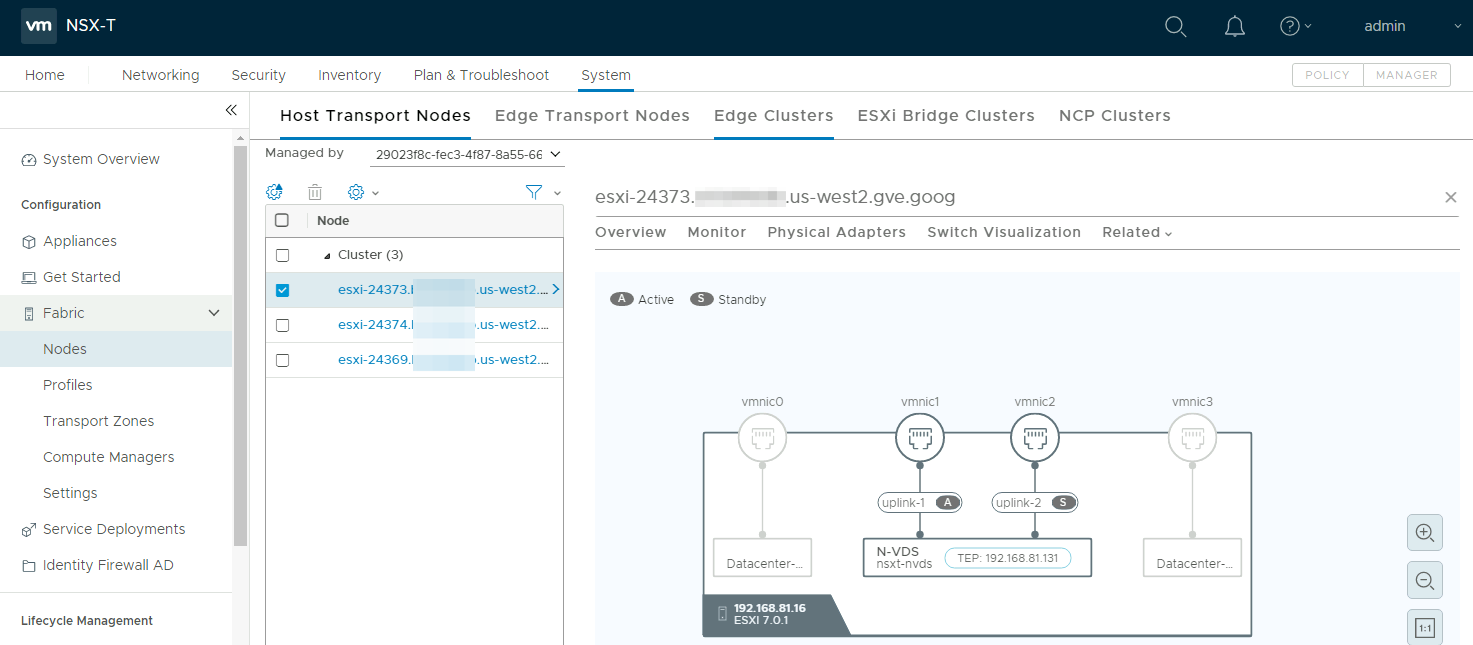

We’ll look at NSX-T further below, but this screenshot from NSX-T is a simple visualization of the N-VDS deployed.

VMkernel and vmnic Configuration

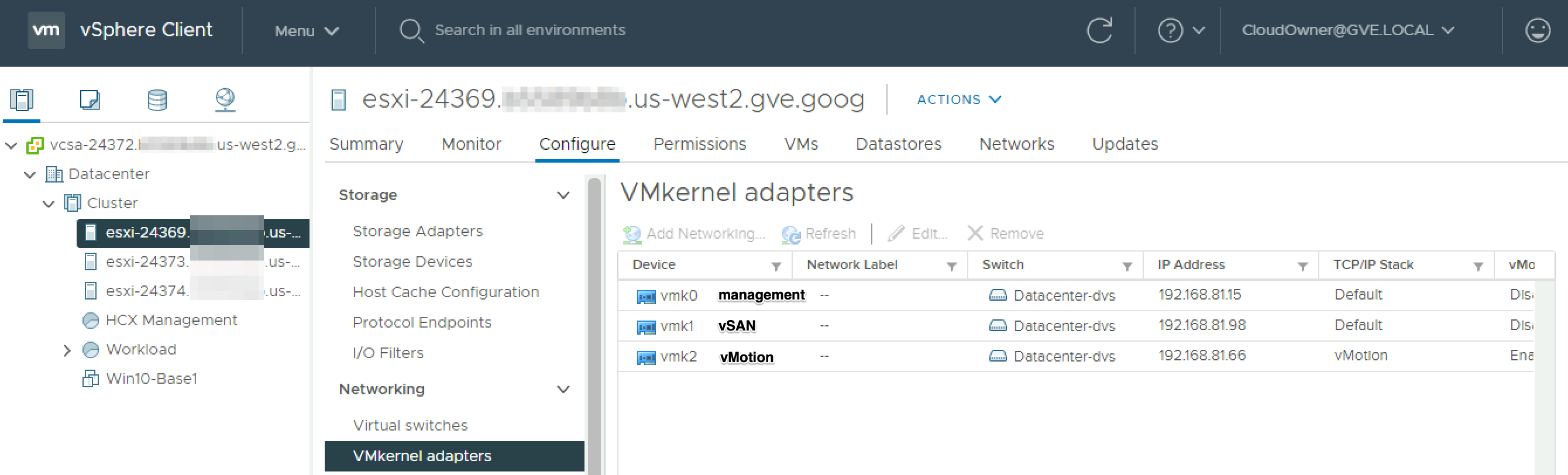

VMkernel configuration is straightforward, with dedicated adapters for management, vSAN, and vMotion. The IP addresses correspond with the management, vSAN, and vMotion subnets that were automatically created when the SDDC was deployed.

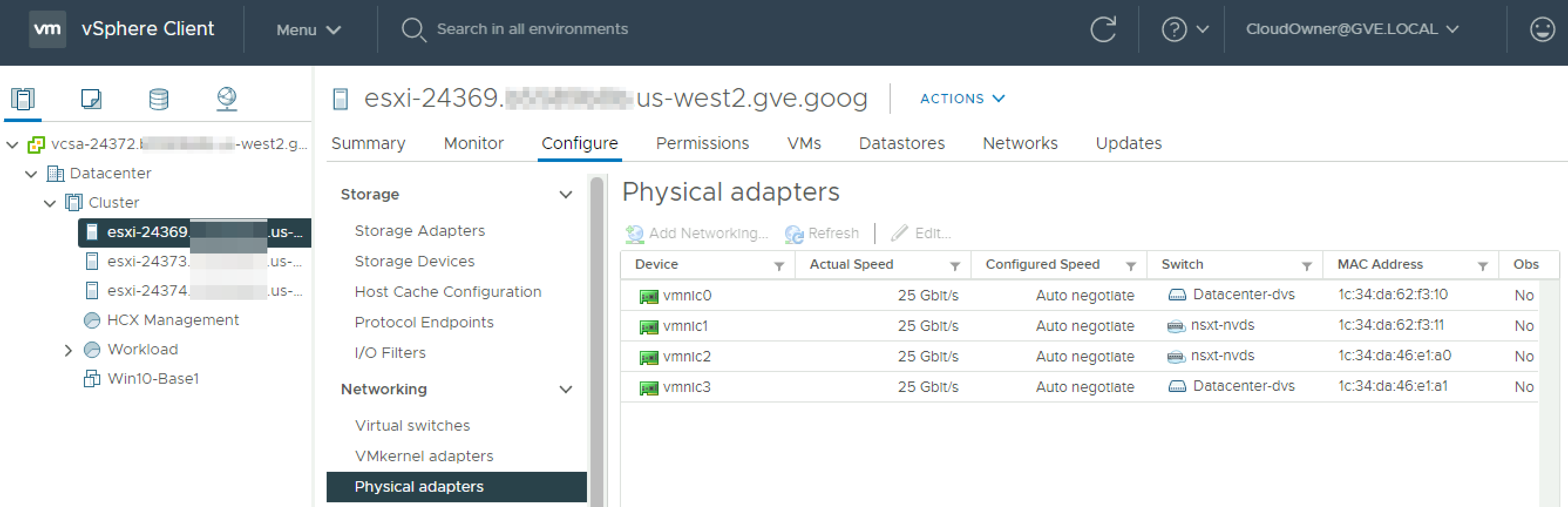

There are four 25 Gbps vmnics (physical adapters) in each host, providing an aggregate of 100 Gbps per host. Two vmnics are dedicated to the VDS, and two are dedicated to the N-VDS.

NSX-T Configuration

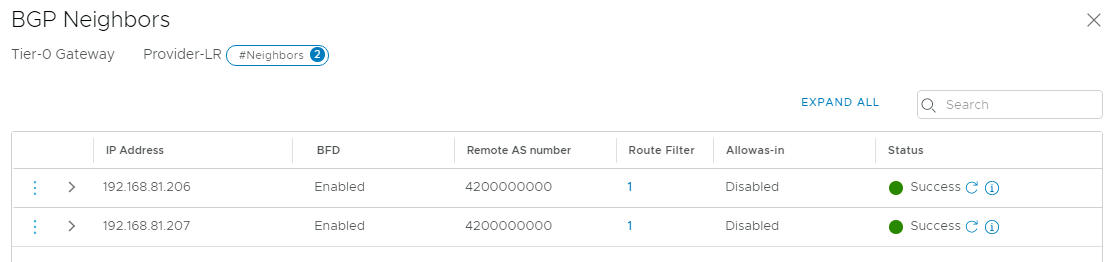

The out-of-the-box NSX-T configuration for GCVE should look very familiar to you if you have ever deployed VMware Cloud Foundation. The T0 router has redundant BGP connections to Google’s infrastructure.

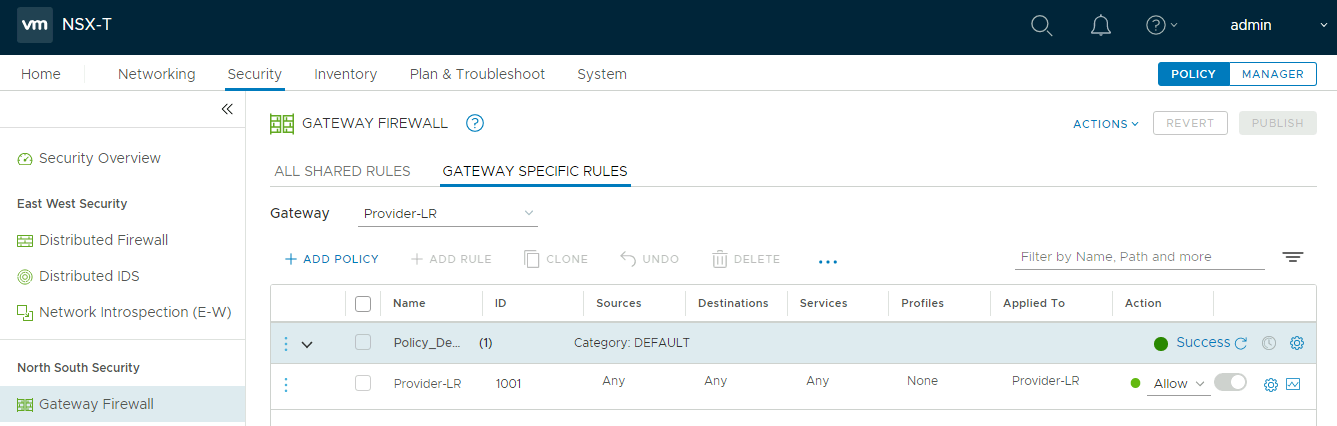

There are no NAT rules configured, and the firewall has a default allow any-any rule. This may not be what you were expecting, but by the end of this post, it should be clearer. We will look at traffic flows in the SDDC Networking Capabilities section below.

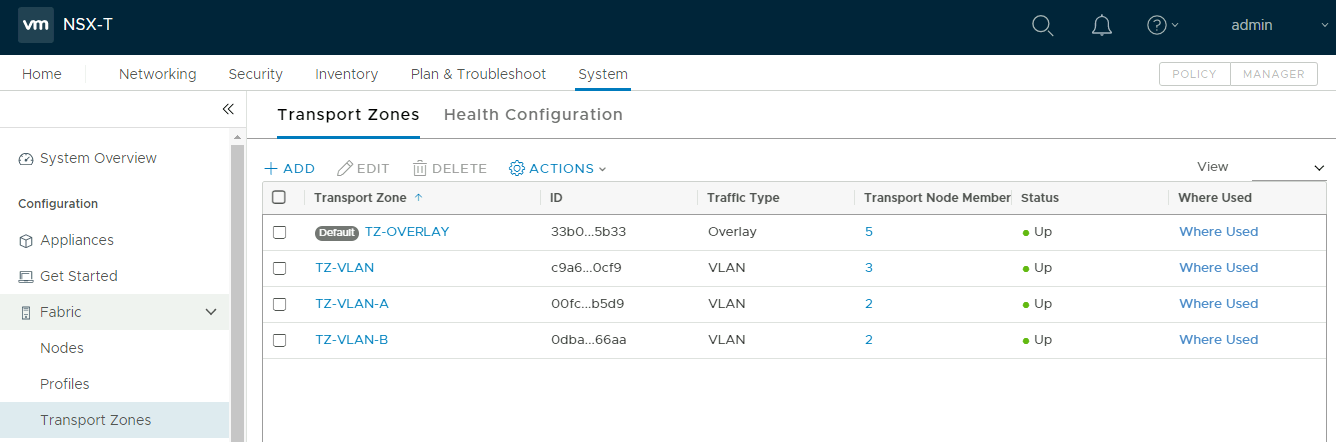

The configured transport zones consist of three VLAN TZs, and a single overlay TZ. The VLAN TZs facilitate the plumbing between the T0 router and Google infrastructure for BGP peering. The TZ-OVERLAY zone is where workload segments will be placed.

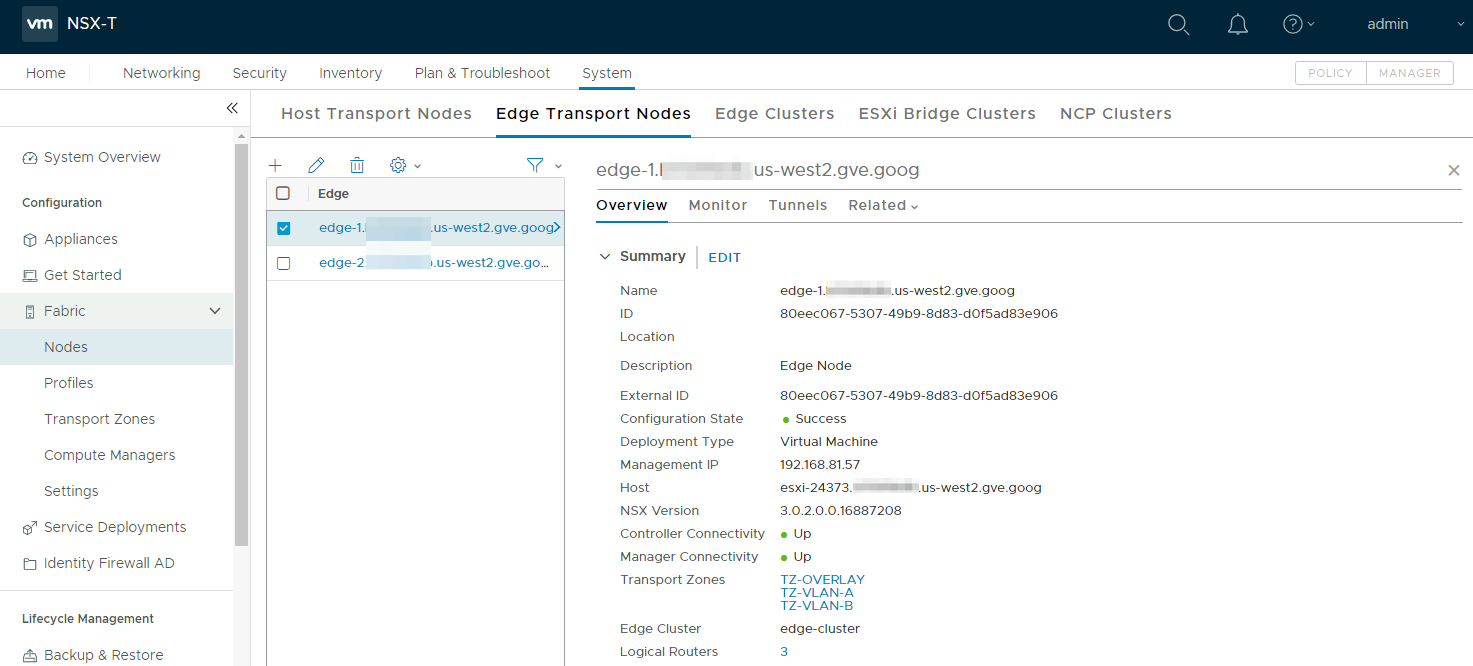

Finally, there is one edge cluster consisting of two edge nodes to host the NSX-T logical routers.

SDDC Networking Capabilities

Now that we’ve peeked behind the curtain, let’s talk about what you can actually do with your SDDC. This is by no means an exhaustive list, but here are some common use cases:

- Create workload segments in NSX-T

- Expose VMs or services to the internet via public IP

- Leverage NSX-T load balancing capabilities

- Create north-south firewall policies with the NSX-T gateway firewall

- Create east-west firewall policies (i.e., micro-segmentation) with the NSX-T distributed firewall

- Access and consume Google Cloud native services

- Migrate VMs from your on-prem data center to your GCVE SDDC with VMware HCX

I will be covering many of these topics in future posts, including automation examples. Next, let’s look at the options for ingress and egress traffic.

Egress Traffic

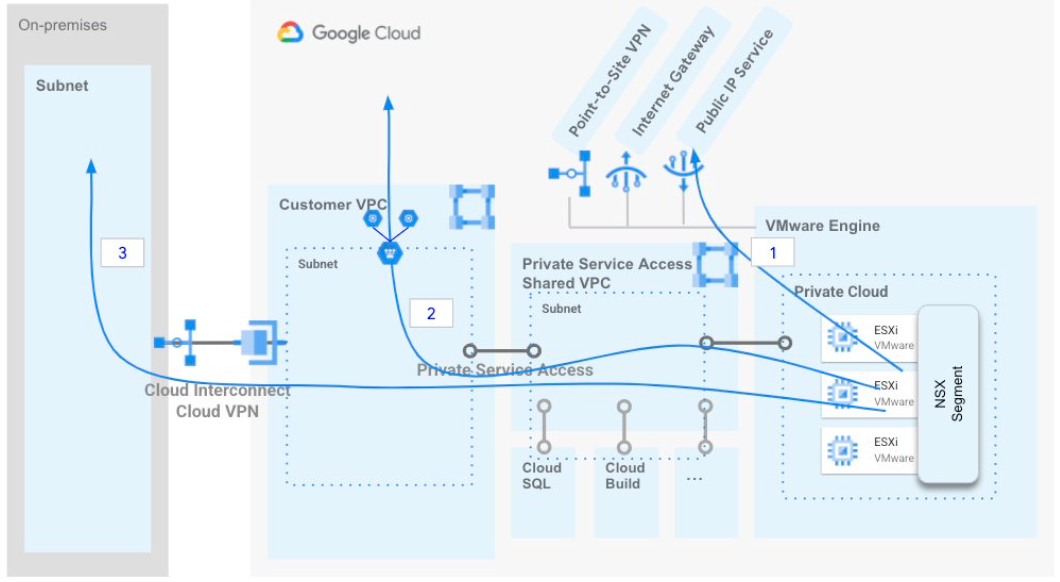

Google Cloud VMware Engine Egress Traffic Flows by Google, licensed under CC BY 3.0

One of the strengths of GCVE is that it provides you with options. As you can see in this diagram, you have three options for egress traffic:

- Egress through the GCVE internet gateway

- Egress through an attached VPC

- Egress through your on-prem data center via Cloud Interconnect or Cloud VPN

In Deploying a GCVE SDDC with HCX, I walked through the steps to enable Internet Access and Public IP Service for your SDDC. This is all that is needed to provide egress internet access through the internet gateway. Internet-bound traffic will be routed from the T0 router to the internet gateway, which NATs all traffic behind a public IP.

Egress through an attached VPC or on-prem datacenter requires additional steps that are beyond the scope of this post, but I will provide documentation links at the end of this post for these scenarios.

Ingress Traffic

Google Cloud VMware Engine Ingress Traffic Flows by Google, licensed under CC BY 3.0

Ingress traffic to GCVE follows paths similar to egress traffic. You can ingress via the public IP service, a connected VPC, or your on-prem data center. Using the public IP service is the least complicated option and requires that you’ve enabled Public IP Service for your SDDC.

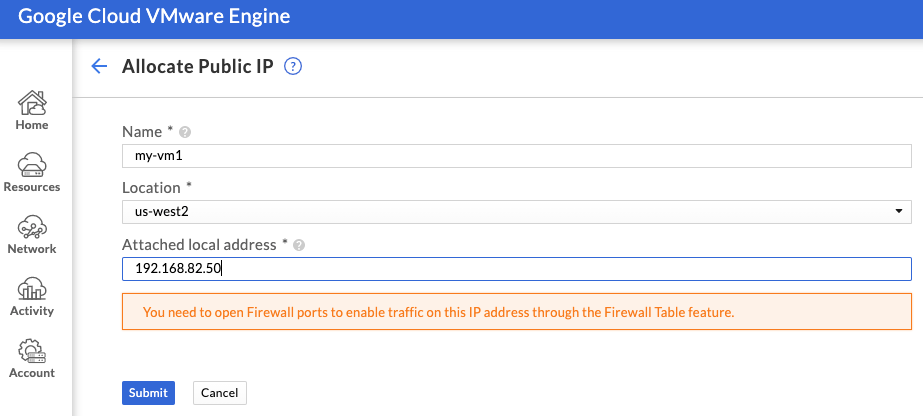

Public IPs are not assigned directly to a VM. Instead, a public IP is allocated and NATed to a private IP in your SDDC. You can allocate a public IP in the GCVE portal by supplying a name for the IP allocation, the region, and the private address.
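To make the one-to-one NAT relationship concrete, here is a minimal Python sketch of how an allocation maps a public IP to a private IP. This is only an illustration of the concept, not how GCVE implements it. The `PublicIpAllocation` class, the helper names, and all addresses are hypothetical. I am also assuming that the private address falls in an RFC 1918 range and that the public IP is handed out by the service rather than chosen by you.

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable, Optional

# RFC 1918 private ranges (assumption: the SDDC-side address lives in one of these).
RFC1918 = [
    ipaddress.ip_network(cidr)
    for cidr in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


@dataclass(frozen=True)
class PublicIpAllocation:
    """Hypothetical record mirroring the fields supplied in the portal."""
    name: str
    region: str
    private_ip: str
    public_ip: str  # assumption: assigned by the service, not by you


def is_rfc1918(address: str) -> bool:
    """Return True if the address falls inside an RFC 1918 private range."""
    ip = ipaddress.ip_address(address)
    return any(ip in net for net in RFC1918)


def translate_inbound(
    allocations: Iterable[PublicIpAllocation], dest_public_ip: str
) -> Optional[str]:
    """Return the private IP that traffic to dest_public_ip is NATed to, if any."""
    for alloc in allocations:
        if alloc.public_ip == dest_public_ip:
            return alloc.private_ip
    return None


# Example values only: 203.0.113.0/24 is a documentation range.
web = PublicIpAllocation("web-01", "us-west2", "192.168.83.10", "203.0.113.10")
```

With this example allocation, `translate_inbound([web], "203.0.113.10")` returns the VM’s private address, and any public IP without an allocation returns `None`.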

Connecting to your SDDC

My previous post, Deploying a GCVE SDDC with HCX, outlines the steps to set up client VPN access to your SDDC, and Bastion Host Access with IAP provides an example bastion host setup for managing your SDDC. These are “day 1” options for connectivity, so you will likely need some other method to connect your on-prem data center to your GCVE SDDC. I covered cloud connectivity options in Cloud Connectivity 101, and many of the methods outlined in that post are available for connecting to GCVE. Today, your options are to use Cloud Interconnect or an IPSec tunnel via Cloud VPN or NSX-T IPSec VPN.

In our lab, we are lucky to have a connection to Megaport, so I am using Partner Interconnect for my testing with GCVE. This is a very easy solution for connecting to the cloud, and their documentation provides simple step-by-step instructions to get up and running. Once complete, BGP peering will be established between the Megaport Cloud Router and a Google Cloud Router.

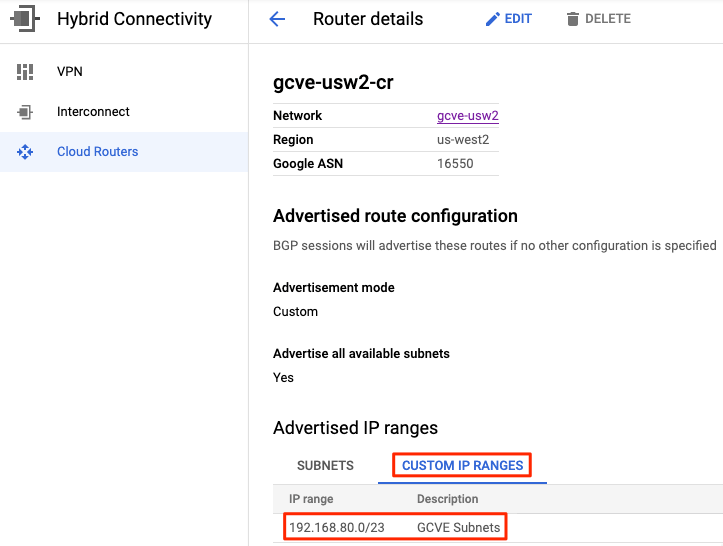

Advertising Routes to GCVE

VPC peering in Google Cloud does not support transitive routing. This means that I had to add a custom advertised IP range for my GCVE subnets to the Google Cloud Router. After adding this configuration, I was able to ping IPs in my SDDC. You will need to configure your DNS server to resolve queries for gve.goog to be able to access vCenter, NSX and HCX by their hostnames.
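Before adding custom advertised ranges, it can help to check that every SDDC subnet you care about is actually covered by what the Cloud Router will advertise. The following is a minimal sketch using Python’s standard `ipaddress` module. The function name and all CIDR values are hypothetical examples, so substitute the management and workload subnets from your own SDDC. It assumes IPv4 ranges throughout.

```python
import ipaddress
from typing import Iterable, List


def uncovered_subnets(
    sddc_subnets: Iterable[str], advertised_ranges: Iterable[str]
) -> List[str]:
    """Return the SDDC subnets that no advertised range fully contains."""
    advertised = [ipaddress.ip_network(r) for r in advertised_ranges]
    missing = []
    for cidr in sddc_subnets:
        subnet = ipaddress.ip_network(cidr)
        # subnet_of() is True when 'subnet' sits entirely inside 'adv'.
        if not any(subnet.subnet_of(adv) for adv in advertised):
            missing.append(cidr)
    return missing


# Example values only; replace with your own SDDC subnets and advertised ranges.
sddc = ["192.168.80.0/24", "192.168.81.0/24", "192.168.83.0/24"]
advertised = ["192.168.80.0/23"]
print(uncovered_subnets(sddc, advertised))  # ['192.168.83.0/24']
```

Any subnet this reports as missing will not be reachable from on-prem until a range covering it is added to the custom advertisements.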

ICMP in GCVE

One nuance in GCVE that threw me off is that ICMP is not supported by the internal load balancer, which is in the path for egress traffic if you are using the internet gateway. Trying to ping 8.8.8.8 will fail, even if your SDDC is correctly connected to the internet. To test internet connectivity from a VM in your SDDC, use another tool like curl or follow the instructions here to install tcpping for testing.
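If you would rather not install anything on the VM, a TCP connection attempt does the same job as a ping for this purpose. Here is a minimal sketch using only the Python standard library. The function name `tcp_reachable` is my own, and the target in the usage comment is just an example (8.8.8.8 answers DNS over TCP on port 53).

```python
import socket


def tcp_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection performs the full TCP handshake, so unlike ping
        # it does not depend on ICMP being permitted along the path.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable networks.
        return False


# Example usage from a VM in the SDDC:
#   tcp_reachable("8.8.8.8", 53)
```

A result of `False` only tells you that the handshake did not complete, so check your gateway firewall rules and routes before concluding that internet access is broken.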

Next Steps

Next, we will stage our SDDC networking segments and connect HCX to begin migrating workloads to GCVE. I highly recommend you read the Private cloud networking for Google Cloud VMware Engine whitepaper, which goes into many of the subjects I’ve touched on in this blog in greater detail.