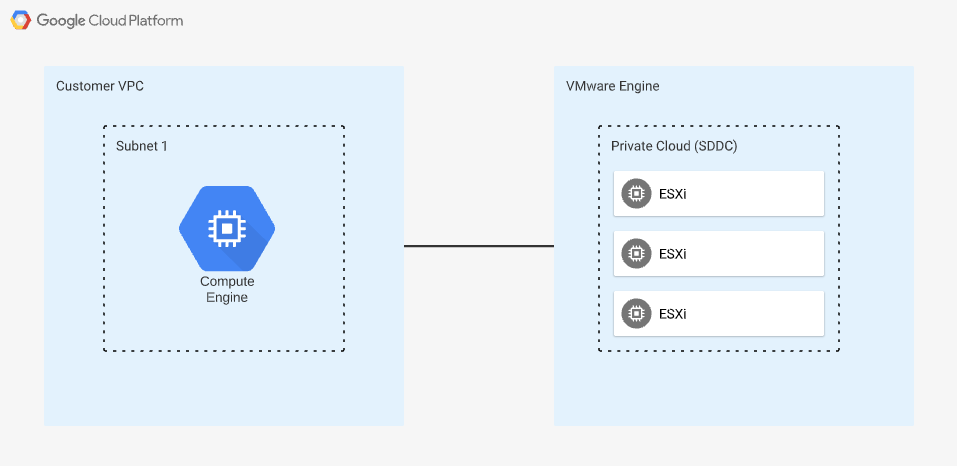

My previous post walked through deploying an SDDC in Google Cloud VMware Engine (GCVE). This post will show the process of connecting a VPC to your GCVE environment, and we will use Terraform to do the vast majority of the work. The diagram below shows the basic concept of what I will be covering in this post. Once connected, you will be able to communicate from your VPC to your SDDC and vice versa. If you would like to complete this process using the cloud console instead of Terraform, see Setting up private service access in the VMware Engine documentation.

Other posts in this series:

- Deploying a GCVE SDDC with HCX

- Bastion Host Access with IAP

- Network and Connectivity Overview

- HCX Configuration

- Common Networking Scenarios

I’m assuming you have a working SDDC deployed in VMware Engine and some basic knowledge of how Terraform works so you can use the provided Terraform examples. If you have not yet deployed an SDDC, please do so before continuing. If you need to get up to speed with Terraform, browse over to https://learn.hashicorp.com/terraform. All of the code referenced in this post will be available at https://github.com/shamsway/gcp-terraform-examples in the gcve-network sub-directory. You will need to have git installed to clone the repo, and I highly recommend using Visual Studio Code with the Terraform add-on installed to view the files.

Private Service Access Overview

GCVE SDDCs can establish connectivity to native GCP services with private services access. This feature can be used to establish connectivity from a VPC to a third-party “service producer,” but in this case, it will simply plumb connectivity between our VPC and SDDC. Configuring private services access requires allocating one or more reserved ranges that cannot be used in your local VPC network. In this case, we will supply the ranges that we have allocated for our VMware Engine SDDC networks. Doing this prevents issues with overlapping IP ranges.
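To make the overlap rule concrete, here is a small standalone sketch that uses Terraform's built-in cidrhost function to print the first and last address of each range. The CIDR values are the example values from terraform.tfvars later in this post. The local and output names are hypothetical and are not part of the example repo.

```hcl
# Standalone sketch: needs no provider or credentials.
# CIDR values are the example values from this post; names are illustrative only.
locals {
  ranges = {
    reserved1  = "192.168.80.0/23" # GCVE management allocation
    vpc_subnet = "192.168.82.0/24" # subnet created in the VPC
    reserved2  = "192.168.84.0/23" # GCVE workload allocation
  }
}

# cidrhost(prefix, 0) returns the first address in a range.
# A host number of -1 counts back from the end and returns the last address.
output "range_bounds" {
  value = {
    for name, cidr in local.ranges :
    name => "${cidrhost(cidr, 0)} - ${cidrhost(cidr, -1)}"
  }
}
```

Applying this file on its own should report 192.168.80.0 - 192.168.81.255, 192.168.82.0 - 192.168.82.255, and 192.168.84.0 - 192.168.85.255, so the VPC subnet sits between the two reserved ranges without touching either.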

Leveraging Terraform for Configuration

I have provided Terraform code that will do the following:

- Create a VPC network

- Create a subnet in the new VPC network that will be used to communicate with GCVE

- Create two Global Address pools that will be used to reserve addresses used in GCVE

- Create a private connection in the new VPC, using the two Global Address pools as reserved ranges

- Enable import and export of custom routes for the VPC

After Terraform completes configuration, you will be able to establish peering with the new VPC in GCVE. To get started, clone the example repo with git clone https://github.com/shamsway/gcp-terraform-examples.git, then change to the gcve-network sub-directory. You will find these files:

- main.tf: Contains the primary Terraform code to complete the steps mentioned above

- variables.tf: Defines the input variables that will be used in main.tf

- terraform.tfvars: Supplies values for the input variables defined in variables.tf

Let’s take a look at what is happening in main.tf, then we will supply the necessary variables in terraform.tfvars and run Terraform. You will see var.[name] appear over and over in the code, as this is how Terraform references variables. You may think it would be easier to place the desired values directly into main.tf instead of defining and supplying variables, but it is worth the time to get used to using variables with Terraform. Hardcoding values in your code is rarely a good idea, and most Terraform code that I have consumed from other authors uses variables heavily.

main.tf Contents

    provider "google" {
      project = var.project
      region  = var.region
      zone    = var.zone
    }

The file begins with a provider block, which is common in Terraform. This block defines the Google Cloud project, region, and zone in which Terraform will create resources. The values used are specified in terraform.tfvars, which is the same method we will use throughout this example.

    resource "google_compute_network" "vpc_network" {
      name                    = var.network_name
      description             = var.network_descr
      auto_create_subnetworks = false
    }

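The first resource block creates a new VPC network in the project specified in the provider block. VPC networks in Google Cloud are global, so the region and zone only matter for the regional resources that follow. Setting auto_create_subnetworks to false specifies that we want a custom mode VPC instead of auto-creating subnets for each region.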

    resource "google_compute_subnetwork" "vpc_subnet" {
      name          = var.subnet_name
      ip_cidr_range = var.subnet_cidr
      region        = var.region
      network       = google_compute_network.vpc_network.id
    }

The next block creates a subnet in the newly created VPC. Notice that the last line references google_compute_network.vpc_network.id for the network value, meaning that it uses the ID value of the VPC created by Terraform.

    resource "google_compute_global_address" "private_ip_alloc_1" {
      name          = var.reserved1_name
      address       = var.reserved1_address
      purpose       = var.address_purpose
      address_type  = var.address_type
      prefix_length = var.reserved1_address_prefix_length
      network       = google_compute_network.vpc_network.id
    }

This block and the following block (google_compute_global_address.private_ip_alloc_2) create a private IP allocation used for the private services configuration.
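The second block is not reproduced in this post. Assuming it mirrors the first and uses the reserved2_* variables that appear in terraform.tfvars, it would look roughly like this:

```hcl
# Assumed shape of the second allocation: same arguments as private_ip_alloc_1,
# with the reserved2_* variables swapped in. Check main.tf in the repo for the
# actual block.
resource "google_compute_global_address" "private_ip_alloc_2" {
  name          = var.reserved2_name
  address       = var.reserved2_address
  purpose       = var.address_purpose
  address_type  = var.address_type
  prefix_length = var.reserved2_address_prefix_length
  network       = google_compute_network.vpc_network.id
}
```

Both allocations share address_purpose and address_type, which is why those two variables are defined only once.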

    resource "google_service_networking_connection" "gcve-psa" {
      network = google_compute_network.vpc_network.id
      service = var.service
      reserved_peering_ranges = [
        google_compute_global_address.private_ip_alloc_1.name,
        google_compute_global_address.private_ip_alloc_2.name
      ]
      depends_on = [google_compute_network.vpc_network]
    }

These last two blocks are where things get interesting. The block above configures the private services connection using the VPC network and the two private IP allocations created by Terraform. The service argument must be a specific string, servicenetworking.googleapis.com, since Google is the service producer in this scenario. This value is set in terraform.tfvars, as we will see in a moment. If you find this confusing, the Terraform documentation for the google_service_networking_connection resource should help.

    resource "google_compute_network_peering_routes_config" "peering_routes" {
      peering              = var.peering
      network              = google_compute_network.vpc_network.name
      import_custom_routes = true
      export_custom_routes = true
      depends_on           = [google_service_networking_connection.gcve-psa]
    }

The final block enables the import and export of custom routes for our VPC peering configuration.

Note that the final two blocks contain an argument that none of the others do: depends_on. The Terraform documentation describes depends_on in depth, but basically, it tells Terraform explicitly that one resource relies on another. Typically, Terraform can determine this automatically from the references between resources, but there are occasional cases where this argument needs to be used. Running terraform destroy without this argument in place may lead to errors, as Terraform could delete the VPC before removing the private services connection or route peering configuration.
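For comparison, here is a sketch of how the same ordering could come from an implicit dependency instead. It assumes that the peering attribute exported by google_service_networking_connection holds the same peering name that var.peering supplies. This is an alternative for illustration, not the code in the example repo.

```hcl
# Alternative sketch, not the code from the example repo.
# Reading the peering name from the connection resource creates an implicit
# dependency, so Terraform orders the two resources without depends_on.
resource "google_compute_network_peering_routes_config" "peering_routes" {
  peering = google_service_networking_connection.gcve-psa.peering
  network = google_compute_network.vpc_network.name

  import_custom_routes = true
  export_custom_routes = true
}
```

The explicit depends_on used in main.tf achieves the same ordering and makes the relationship visible at a glance, which is a reasonable choice when the peering name comes from a variable.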

terraform.tfvars Contents

terraform.tfvars is the file that supplies values for all the variables referenced in main.tf. All you need to do is supply the desired values for your environment, and you are good to go. Note that the values below are all examples, so simply copying and pasting may not lead to the desired result.

    project                         = "your-gcp-project"
    region                          = "us-west2"
    zone                            = "us-west2-a"
    network_name                    = "gcve-usw2"
    network_descr                   = "Network for testing of GCVE in USW2"
    subnet_name                     = "gcve-usw2-mgmt"
    subnet_cidr                     = "192.168.82.0/24"
    reserved1_name                  = "gcve-managemnt-ip-alloc"
    reserved1_address               = "192.168.80.0"
    reserved1_address_prefix_length = 23
    reserved2_name                  = "gcve-workload-ip-alloc"
    reserved2_address               = "192.168.84.0"
    reserved2_address_prefix_length = 23
    address_purpose                 = "VPC_PEERING"
    address_type                    = "INTERNAL"
    service                         = "servicenetworking.googleapis.com"
    peering                         = "servicenetworking-googleapis-com"

Additional information on the variables used is available in README.md. You can also find information on these variables, including their default values should one exist, in variables.tf.
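As a rough illustration of what such declarations look like, here is a hypothetical excerpt. The variable names match the ones used in main.tf, but the types, descriptions, and the default shown are assumptions, so the real variables.tf in the repo may differ.

```hcl
# Hypothetical excerpt; the real variables.tf in the repo may differ.
variable "project" {
  type        = string
  description = "ID of the Google Cloud project to create resources in"
}

variable "reserved1_address_prefix_length" {
  type        = number
  description = "Prefix length of the first reserved range"
}

variable "address_purpose" {
  type        = string
  description = "Purpose of the reserved ranges"
  default     = "VPC_PEERING" # assumed default, used when tfvars omits a value
}
```

A variable without a default must be given a value in terraform.tfvars (or on the command line), while a value in terraform.tfvars overrides any default.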

Initializing and Running Terraform

Terraform will use Application Default Credentials to authenticate to Google Cloud. Assuming you have the gcloud CLI installed, you can set these by running gcloud auth application-default login. Additional information on authentication can be found in the Getting Started with the Google Provider Terraform documentation. To run the Terraform code, follow the steps below.

Following these steps will create resources in your Google Cloud project, and you will be billed for them.

- Run terraform init and ensure no errors are displayed

- Run terraform plan and review the changes that Terraform will perform

- Run terraform apply to apply the proposed configuration changes

Should you wish to remove everything created by Terraform, run terraform destroy and answer yes when prompted. This will only remove the VPC network and related configuration created by Terraform. Your GCVE environment is not managed by this code and will have to be deleted separately by following the VMware Engine documentation, if desired.

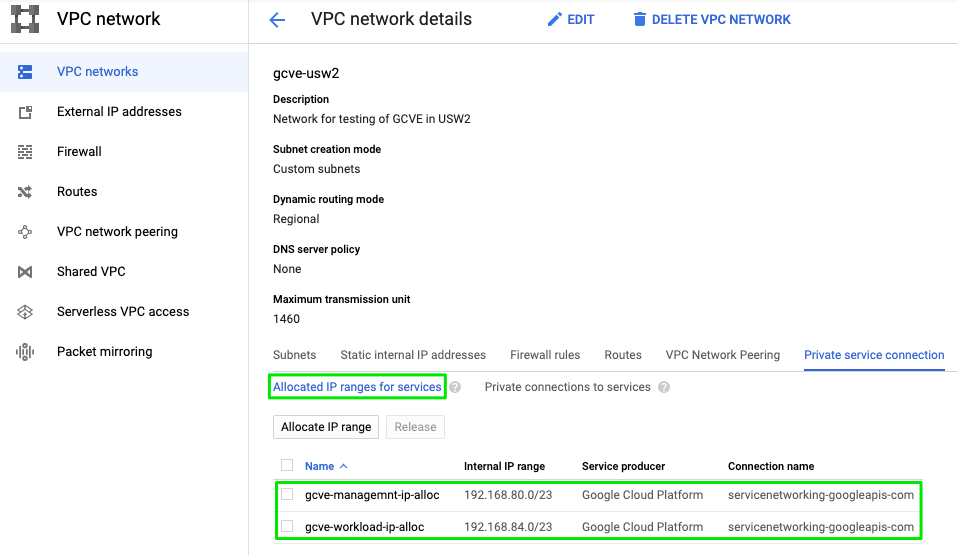

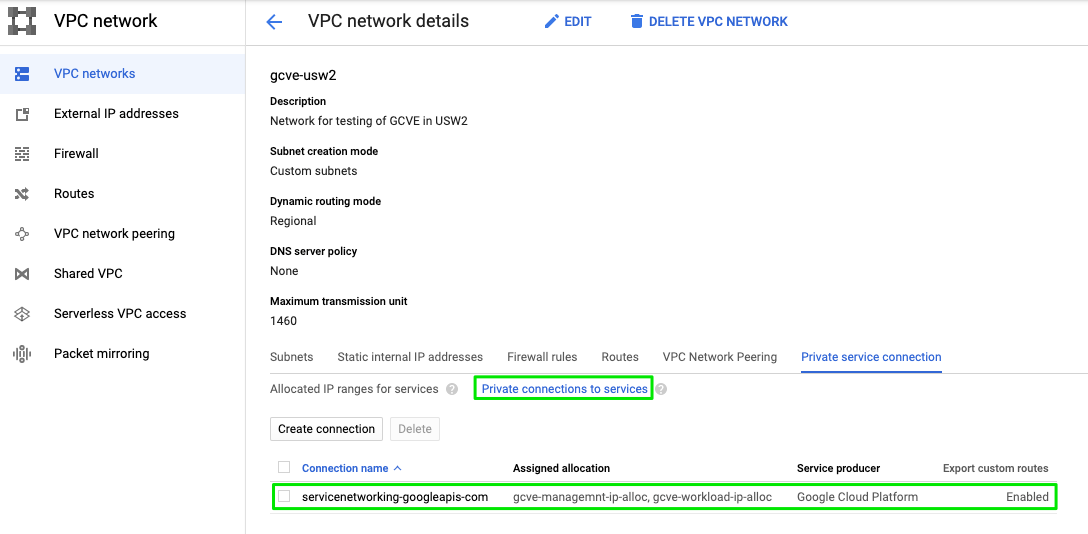

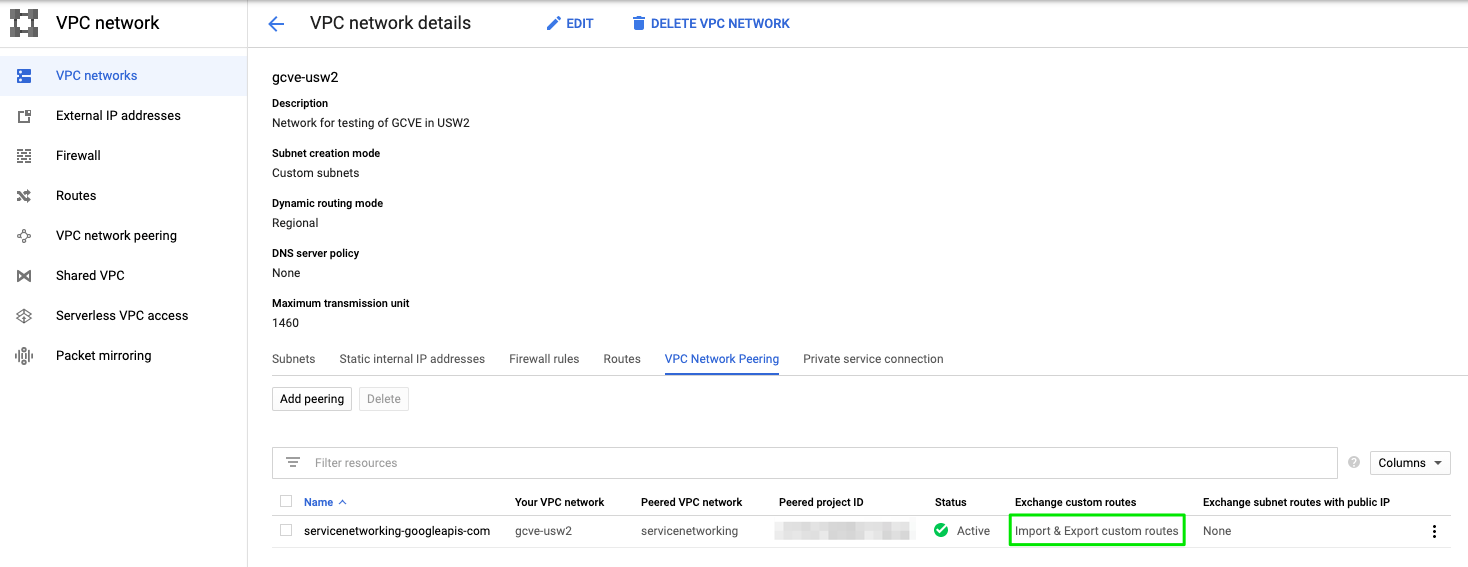

Review VPC Configuration

Once terraform apply completes, you can see the results in the Google Cloud Console.

IP ranges allocated for use in GCVE are reserved.

Private service access is configured.

Import and export of custom routes on the servicenetworking-googleapis-com private connection is enabled.

Complete Peering in GCVE

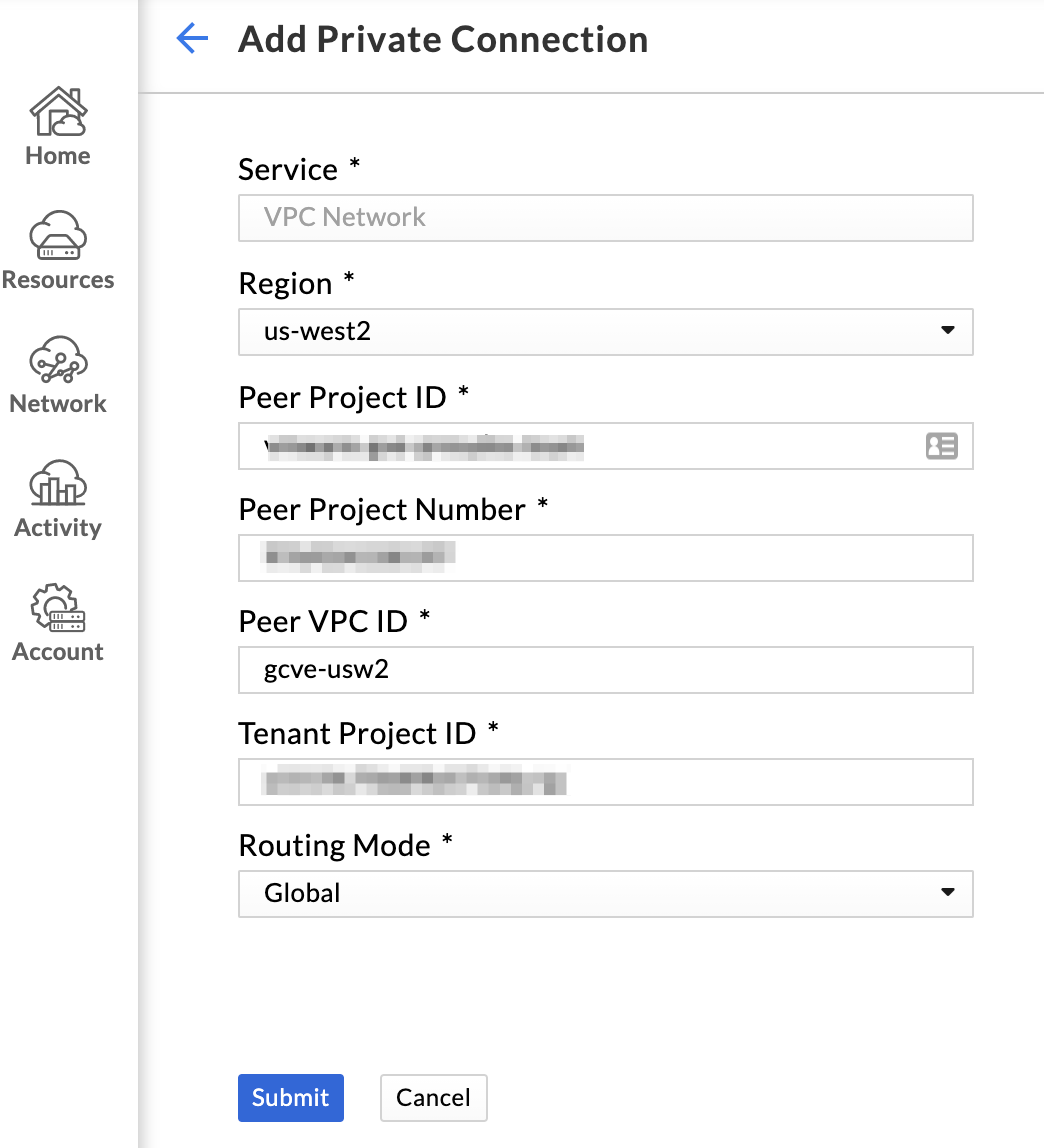

The final step is to create the private connection in the VMware Engine portal. You will need the following information to configure the private connection.

- Project ID (found under Project info on the console dashboard). Project ID may be different than Project Name, so verify you are gathering the correct information.

- Project Number (also found under Project info on the console dashboard)

- Name of the VPC (the network_name value in your terraform.tfvars file)

- Peered project ID from the VPC Network Peering screen
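Some of these values can also be printed by Terraform itself. The sketch below is a hypothetical outputs.tf that would sit next to main.tf. It is not part of the example repo, and the data source and output names are my own. It uses the google_project data source, which reads the provider's project when no project_id is given.

```hcl
# Hypothetical outputs.tf, placed next to main.tf; not part of the example repo.
# With no project_id argument, this data source reads the provider's project.
data "google_project" "current" {}

output "project_id" {
  value = data.google_project.current.project_id
}

output "project_number" {
  value = data.google_project.current.number
}

# Name of the VPC created in main.tf
output "vpc_name" {
  value = google_compute_network.vpc_network.name
}
```

After terraform apply, running terraform output would list these three values. The peered project ID is not covered by this sketch and still has to be read from the VPC Network Peering screen.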

Save all of these values somewhere handy, and follow these steps to complete peering:

- Open the VMware Engine portal, and browse to Network > Private connection.

- Click Add network connection and paste the required values. Supply the peered project ID in the Tenant project ID field, VPC name in the Peer VPC ID field, and complete the remaining fields.

- Choose the region your VMware Engine private cloud is deployed in, and click submit.

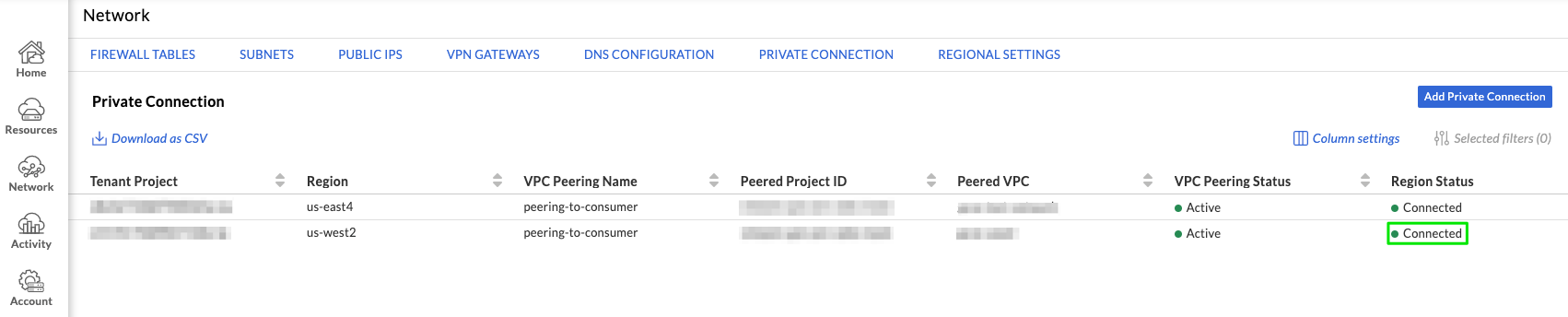

After a few moments, Region Status should show a status of Connected. Your VMware Engine private cloud is now peered with your Google Cloud VPC. You can verify peering is working by checking the routing table of your VPC.

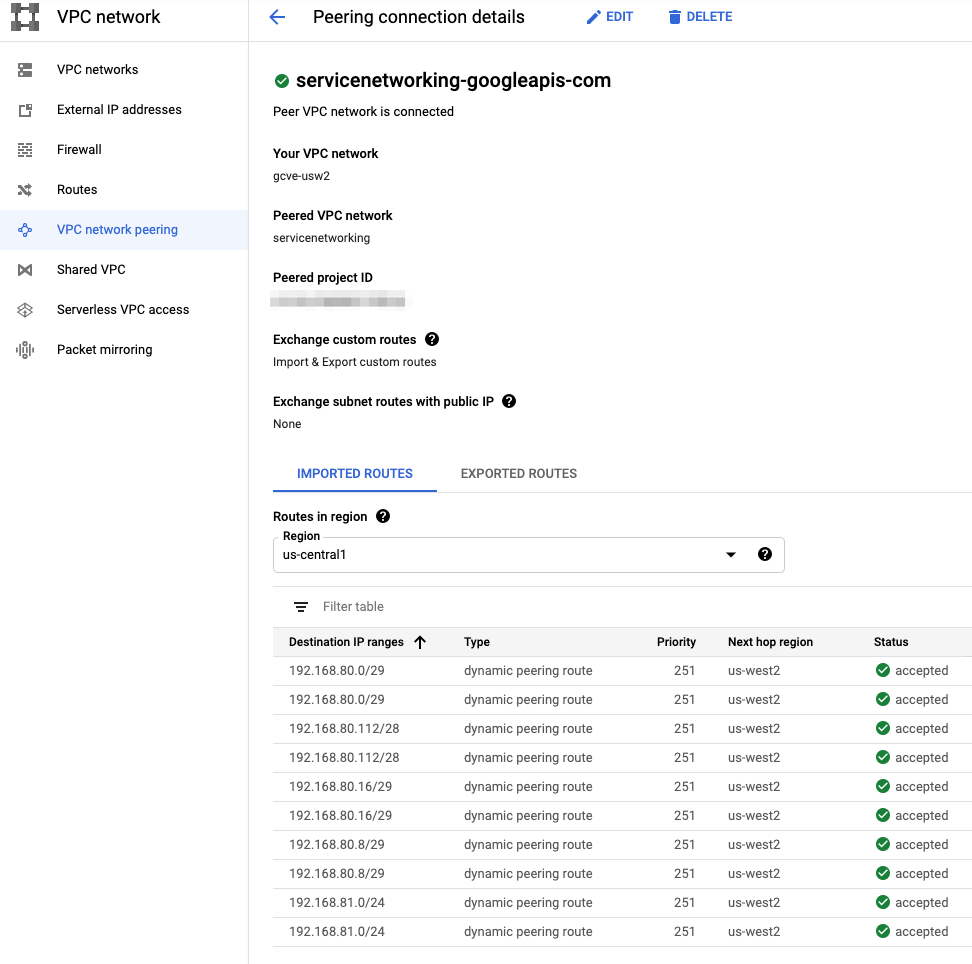

Verify VPC Routing Table

Once peering is completed, you should see routes for networks in your GCVE SDDC in your VPC routing table. You can view these routes in the cloud console or with:

    gcloud compute networks peerings list-routes servicenetworking-googleapis-com --network=[VPC Name] --region=[Region name] --direction=INCOMING

Verifying routes with the gcloud cli

Wrap Up

Well, that was fun! You should now have established connectivity between your VMware Engine SDDC and your Google Cloud VPC, but we are only getting started. My next post will cover creating a bastion host in GCP to manage your GCVE environment, and I may take a look at Cloud DNS as well.

This post comes at a good time, as Google has just announced several enhancements to GCVE, including multiple VPC peering. I’m planning on exploring these enhancements in future posts.